CaseWhat is a fast way to load a Slowly Changing Dimension or Persistent Staging Area in SSIS. When using a Data Flow Task for this, the process could become very slow when there are a lot of updates.

Solution

Instead of using the OLE DB Command for updating records you could load all those records to a temporary table and then use a batch update command to update all records in the target table with the values from the temporary table.

An even fancier

The MERGE statement will update the existing record in the destination table, but it can output the old version of the updated record. You can then use this output to do an insert on the destination table.

First create a source and destination table for testing purposes:

The destination table is a Slowly Changing Dimension, but it could also be a Persistent Staging Area. It has the same columns as the source with a few extra columns:

And now the MERGE script. Below I will try to describe each section that starts with a comment and number: /***** 1 *****/.

Testing the script:

Second test:

I use this script primarily for the Persistent Staging Area. When you want to use it for an SCD you have to reload the facttable because the dimension ID changes. The fact pointing to ID 4 with David's old salary now points to the record with David's new salary.

The alternative script below could be a solution for that. Instead of comparing the key columns, I compare the CHECKSUM (or HASHBYTES) of all columns and remove the WHEN matched part (if the checksum matches, then we don't have to do anything). The benefit of this is that the dimension ID never changes. A second benefit is that you don't need to know the key columns. One downside is that CHECKSUM may not be unique and the HASHBYTES can only handle 8000bytes and can't compare NULL values. So the script below is NOT yet foolproof!!! Will work on that, but let me know if you have a solution.

Testing the script:

Second test:

Solution

Instead of using the OLE DB Command for updating records you could load all those records to a temporary table and then use a batch update command to update all records in the target table with the values from the temporary table.

An even fancier

The MERGE statement will update the existing record in the destination table, but it can output the old version of the updated record. You can then use this output to do an insert on the destination table.

First create a source and destination table for testing purposes:

-- Drop if exist

IF OBJECT_ID('dbo.Employees', 'U') IS NOT NULL

DROP TABLE dbo.Employees;

-- Create source table

CREATE TABLE [dbo].[Employees](

[EmployeeNumber] [varchar](5) NULL,

[FirstName] [varchar](50) NULL,

[LastName] [varchar](50) NULL,

[DateOfBirth] [date] NULL,

[Salary] [money] NULL

);

-- Insert test records in source

INSERT [dbo].[Employees] ([EmployeeNumber], [FirstName], [LastName], [DateOfBirth], [Salary]) VALUES (N'00001', N'John', N'Williams', CAST(N'1972-02-15' AS Date), 5100.00);

INSERT [dbo].[Employees] ([EmployeeNumber], [FirstName], [LastName], [DateOfBirth], [Salary]) VALUES (N'00002', N'Jane', N'Smith', CAST(N'1965-09-02' AS Date), 4900.00);

INSERT [dbo].[Employees] ([EmployeeNumber], [FirstName], [LastName], [DateOfBirth], [Salary]) VALUES (N'00003', N'Marc', N'Brown', CAST(N'1981-12-01' AS Date), 3300.00);

INSERT [dbo].[Employees] ([EmployeeNumber], [FirstName], [LastName], [DateOfBirth], [Salary]) VALUES (N'00004', N'David', N'Garcia', CAST(N'1975-01-01' AS Date), 3700.00);

-- Drop if exist

IF OBJECT_ID('dbo.DimEmployee', 'U') IS NOT NULL

DROP TABLE dbo.DimEmployee;

-- Create destination table

CREATE TABLE [dbo].[DimEmployee](

[EmployeeID] [int] IDENTITY(1,1) NOT NULL,

[EmployeeNumber] [varchar](5) NULL,

[FirstName] [varchar](50) NULL,

[LastName] [varchar](50) NULL,

[DateOfBirth] [date] NULL,

[Salary] [money] NULL,

[Active] [bit] NULL,

[DateFrom] [datetime] NULL,

[DateEnd] [datetime] NULL

);

The destination table is a Slowly Changing Dimension, but it could also be a Persistent Staging Area. It has the same columns as the source with a few extra columns:

- EmployeeID is the dimension id it's populated by the identity setting. You could skip this column for the Persistent Staging Area.

- Active: This is a Boolean to quickly filter all active records. It's a bit redundant, but also easy.

- DateFrom: This is the datetime to indicate the insertion of this record. It never changes.

- DateFrom: This is the datetime to indicate when this record was inactivated. I use NULL for active records, but you could also use a future date like '9999-31-12'

And now the MERGE script. Below I will try to describe each section that starts with a comment and number: /***** 1 *****/.

--Merge script

/***** 6b *****/

INSERT INTO DimEmployee

(EmployeeNumber

, FirstName

, LastName

, DateOfBirth

, Salary

, Active

, DateFrom

, DateEnd)

SELECT MergeOutput.EmployeeNumber

, MergeOutput.FirstName

, MergeOutput.LastName

, MergeOutput.DateOfBirth

, MergeOutput.Salary

, 0 -- InActivate the record

, MergeOutput.DateFrom -- Keep the old from date

, GETDATE() -- Close the record

FROM (

/***** 1 *****/

MERGE DimEmployee as T -- Target

USING Employees as S -- Source

ON T.EmployeeNumber = S.EmployeeNumber -- Compare key

AND T.Active = 1 -- Only compare open records

/***** 2 *****/

WHEN NOT MATCHED BY TARGET THEN -- Not found in destination

INSERT

( EmployeeNumber

, FirstName

, LastName

, DateOfBirth

, Salary

, Active

, DateFrom)

VALUES

( S.EmployeeNumber

, S.FirstName

, S.LastName

, S.DateOfBirth

, S.Salary

, 1 -- Activate the record

, GETDATE()) -- Open the record

/***** 3 *****/

WHEN NOT MATCHED BY SOURCE -- Not found in source

AND T.Active = 1 THEN -- Only compare open records

UPDATE

SET T.Active = 0 -- Inactivate record

, T.DateEnd = GETDATE() -- Close date

/***** 4 *****/

WHEN MATCHED -- Found in source and destination

AND T.Active = 1 -- Only compare open records

AND EXISTS (SELECT S.FirstName

, S.LastName

, S.DateOfBirth

, S.Salary

EXCEPT

SELECT T.FirstName

, T.LastName

, T.DateOfBirth

, T.Salary) THEN

UPDATE

SET T.FirstName = S.FirstName

, T.LastName = S.LastName

, T.DateOfBirth = S.DateOfBirth

, T.Salary = S.Salary

, T.Active = 1 -- Make record active

, T.DateFrom = GETDATE() -- Open record with current datetime

, T.DateEnd = null -- Keep record open

/***** 5 *****/

OUTPUT $action as MergeAction, Deleted.*, Inserted.Active as NewActiveCheck

/***** 6a *****/

) as MergeOutput WHERE MergeAction = 'UPDATE' and NewActiveCheck = 1;

- In this section you provide the name of the target and source table and which key to use to compare those records. I also added a filter on active to only compare open records. You could replace it by T.DateFrom is null.

- This section is for new records. Source records that are not found in the destination will be inserted with Active set to 1 (true), the FromDate set to now and the EndDate set to null.

- This section is for deleted records. Active destination records that are not found in the source are deactived and closed by setting the EndDate. Other columns remain unchanged.

- This secion is for active updating record with new values. To prevent unnecessary updates I have added EXISTS-EXCEPT part. This is a very handy way to compare all (non key) columns for changes and above all it can even compare NULL values.

- This is the last part of the MERGE statement and it can output the old and new values of deletes, updates and insertions. In this case I'm interested in the old values of the changed records (Deleted.* or Deleted.column1, Deleted.column2, etc). I also output the Active column from the new record to filter inactivated records (deleted records from the source shouldn't be inserted again). The $active indicates whether this is an 'INSERT', 'UPDATE', or 'DELETE'.

- In 6a I filter on the action to only keep the old values of the updated records. In 6b I insert a new record with the old values of the changed records. I inactivate the new record and I set the EndDate to close the new record. Other columns remain unchanged.

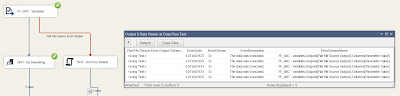

Testing the script:

|

| One update |

Second test:

|

| One update, one delete and one insert |

I use this script primarily for the Persistent Staging Area. When you want to use it for an SCD you have to reload the facttable because the dimension ID changes. The fact pointing to ID 4 with David's old salary now points to the record with David's new salary.

The alternative script below could be a solution for that. Instead of comparing the key columns, I compare the CHECKSUM (or HASHBYTES) of all columns and remove the WHEN matched part (if the checksum matches, then we don't have to do anything). The benefit of this is that the dimension ID never changes. A second benefit is that you don't need to know the key columns. One downside is that CHECKSUM may not be unique and the HASHBYTES can only handle 8000bytes and can't compare NULL values. So the script below is NOT yet foolproof!!! Will work on that, but let me know if you have a solution.

--Alternative Merge script with checkum or hashbytes

/***** 6b *****/

INSERT INTO DimEmployee

( EmployeeNumber

, FirstName

, LastName

, DateOfBirth

, Salary

, Active

, DateFrom

, DateEnd)

SELECT MergeOutput.EmployeeNumber

, MergeOutput.FirstName

, MergeOutput.LastName

, MergeOutput.DateOfBirth

, MergeOutput.Salary

, 0 -- InActivate the record

, MergeOutput.DateFrom -- Keep the old from date

, GETDATE() -- Close the record

FROM (

/***** 1 *****/

MERGE DimEmployee as T -- Target

USING Employees as S -- Source

ON CHECKSUM(S.EmployeeNumber + '|' + S.FirstName + '|' + S.LastName + '|' + CAST(S.DateOfBirth as varchar(10)) + '|' + CAST(S.Salary as varchar(20))) =

CHECKSUM(T.EmployeeNumber + '|' + T.FirstName + '|' + T.LastName + '|' + CAST(T.DateOfBirth as varchar(10)) + '|' + CAST(T.Salary as varchar(20)))

--ON HASHBYTES('MD5 ', S.EmployeeNumber + '|' + S.FirstName + '|' + S.LastName + '|' + CAST(S.DateOfBirth as varchar(10)) + '|' + CAST(S.Salary as varchar(20))) =

-- HASHBYTES('MD5 ', T.EmployeeNumber + '|' + T.FirstName + '|' + T.LastName + '|' + CAST(T.DateOfBirth as varchar(10)) + '|' + CAST(T.Salary as varchar(20)))

AND T.Active = 1 -- Only compare open records

/***** 2 *****/

WHEN NOT MATCHED BY TARGET THEN -- Not found in destination

INSERT

( EmployeeNumber

, FirstName

, LastName

, DateOfBirth

, Salary

, Active

, DateFrom)

VALUES

( S.EmployeeNumber

, S.FirstName

, S.LastName

, S.DateOfBirth

, S.Salary

, 1 -- Activate the record

, GETDATE()) -- Open the record

/***** 3 *****/

WHEN NOT MATCHED BY SOURCE -- Not found in source

AND T.Active = 1 THEN -- Only compare open records

UPDATE

SET T.Active = 0 -- Inactivate record

, T.DateEnd = GETDATE() -- Close date

/***** 4 *****/

/***** REMOVED *****/

/***** 5 *****/

OUTPUT $action as MergeAction, Deleted.*, Inserted.Active as NewActiveCheck

/***** 6a *****/

) as MergeOutput WHERE MergeAction = 'UPDATE' and NewActiveCheck = 1;

Testing the script:

|

| One update |

Second test:

|

| One update, one delete and one insert |