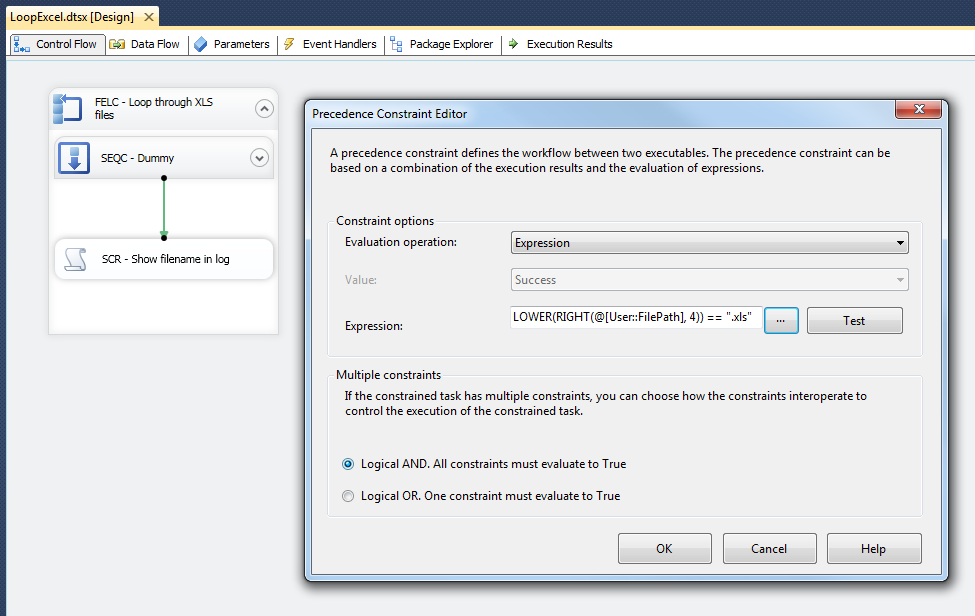

Case

I want to use the WMI Event Watcher Task to watch for new files in SSIS, but it doesn't allow me to watch subfolders. After catching an event it continues with the next task, and meanwhile it doesn't watch for new files until it starts again. Is there an alternative?

[Figure: WMI Event Watcher Task]

Solution

An alternative is to create a Windows Service that does the watching and then executes a package when a new file arrives. For this example I used the full version of Visual Studio 2010 (SSDT BI is not enough). The example is in C#, but you can easily convert it to VB.NET.
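Before building the service, the watching behaviour itself can be tried out in a small console program. The following is only a minimal sketch of that idea, not the service: the class name WatcherSketch, the Watch helper and the *.csv filter are assumptions made for illustration.

```csharp
using System;
using System.IO;

public static class WatcherSketch
{
    // Creates a watcher for 'path' (including subfolders) and calls 'onCreated'
    // with the full path of every newly created file that matches 'filter'.
    public static FileSystemWatcher Watch(string path, string filter, Action<string> onCreated)
    {
        var watcher = new FileSystemWatcher(path, filter);
        watcher.IncludeSubdirectories = true;
        watcher.Created += (sender, e) => onCreated(e.FullPath);
        // Subscribe first, then start raising events
        watcher.EnableRaisingEvents = true;
        return watcher;
    }

    public static void Main(string[] args)
    {
        // Hypothetical default: watch the current folder when no path is given
        string path = args.Length > 0 ? args[0] : Directory.GetCurrentDirectory();

        using (FileSystemWatcher watcher = Watch(path, "*.csv", p => Console.WriteLine("Created: " + p)))
        {
            Console.WriteLine("Watching " + path + ". Press Enter to stop.");
            Console.ReadLine();
        }
    }
}
```

The handlers run on thread pool threads, so the watcher keeps reporting new files while an earlier event is still being handled.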

1) Windows Service Project

Start Visual Studio and create a new Windows Service project. I used the 4.0 framework since I want to execute SSIS 2012 packages. For 2008 you can use 3.5.

[Figure: Windows Service 4.0 C#]

2) App.Config

To avoid hardcoded connection strings, paths and filters, we first have to add an Application Configuration File (App.config). Right-click the project in the Solution Explorer, choose Add, New Item... and then Application Configuration File. After adding the app.config we need to edit it. You can copy the XML below to start with and add extra parameters at the end. Make sure the values are correct.

[Figure: app.config]

    <?xml version="1.0" encoding="utf-8" ?>
    <configuration>
      <appSettings>
        <add key="WatcherPath" value="d:\MySourceFiles"/>
        <add key="WatcherFilter" value="*.csv"/>
        <add key="SqlConnectionString" value="Data Source=.\SQL2012;Initial Catalog=master;Integrated Security=SSPI;"/>
        <add key="Catalog" value="SSISDB"/>
        <add key="Folder" value="MyFolder"/>
        <add key="Project" value="MyProject"/>
        <add key="Package" value="MyPackage.dtsx"/>
        <add key="LogFile" value="d:\FileWatcherForSSIS\log.txt"/>
      </appSettings>
    </configuration>

[Figure: MyCatalogStructure]
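Because the service depends on every one of these keys, a missing or misspelled key is easier to diagnose when it is reported by name. The helper below is a minimal sketch of such a check. The class name Settings and the method Require are assumptions for illustration and are not part of the example project.

```csharp
using System;
using System.Collections.Specialized;

public static class Settings
{
    // Returns the value for 'key', or throws with a clear message
    // when the key is missing or empty.
    public static string Require(NameValueCollection settings, string key)
    {
        string value = settings[key];
        if (string.IsNullOrWhiteSpace(value))
        {
            throw new InvalidOperationException("Missing appSettings key: " + key);
        }
        return value;
    }
}
```

In the service this could be called as Settings.Require(ConfigurationManager.AppSettings, "WatcherPath"), since ConfigurationManager.AppSettings is a NameValueCollection.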

3) Add References

Right-click References in the Solution Explorer and choose Add Reference... For the configuration part we need to add two extra references that can be found in the .NET tab:

- System.Configuration.dll

- System.Configuration.Install.dll

[Figure: Add References]

For executing the SSIS packages we need four extra references that can be found in the GAC (MSIL) folder. The exact place could vary, but here are mine for SSIS 2012. Use the browse tab in the Add Reference Window to add them.

C:\Windows\assembly\GAC_MSIL\Microsoft.SqlServer.ConnectionInfo\11.0.0.0__89845dcd8080cc91\Microsoft.SqlServer.ConnectionInfo.dll

C:\Windows\assembly\GAC_MSIL\Microsoft.SqlServer.Management.Sdk.Sfc\11.0.0.0__89845dcd8080cc91\Microsoft.SqlServer.Management.Sdk.Sfc.dll

C:\Windows\assembly\GAC_MSIL\Microsoft.SqlServer.Smo\11.0.0.0__89845dcd8080cc91\Microsoft.SqlServer.Smo.dll

C:\Windows\assembly\GAC_MSIL\Microsoft.SqlServer.Management.IntegrationServices\11.0.0.0__89845dcd8080cc91\Microsoft.SqlServer.Management.IntegrationServices.dll

4) Service1.cs

Now open the source code of Service1.cs to add the actual Windows Service code. In the grey designer surface press F7 or click on the link to switch to code view. Copy the code below. If you don't want to use the Project Deployment Model then you must slightly change the code in the watcher_Created method.

    // C# code
    using System;
    using System.Collections.Generic;
    using System.Collections.ObjectModel; // Added
    using System.ComponentModel;
    using System.Configuration; // Added
    using System.Data;
    using System.Data.SqlClient; // Added
    using System.Diagnostics;
    using System.IO; // Added
    using System.Linq;
    using System.ServiceProcess;
    using System.Text;
    using Microsoft.SqlServer.Management.IntegrationServices; // Added

    namespace FileWatcherForSSIS
    {
        public partial class Service1 : ServiceBase
        {
            // Keep the watcher in a field so it is not garbage collected
            // while the service is running
            private FileSystemWatcher watcher;

            // Handlers can run at the same time, so serialize log writes
            private static readonly object logLock = new object();

            public Service1()
            {
                InitializeComponent();
            }

            /// <summary>
            /// Method that executes when the service starts
            /// </summary>
            /// <param name="args"></param>
            protected override void OnStart(string[] args)
            {
                // Create FileSystemWatcher dynamically instead of via the designer
                watcher = new FileSystemWatcher();

                // Determine the folder you are watching
                watcher.Path = ConfigurationManager.AppSettings["WatcherPath"];

                // Determine for which files to watch
                watcher.Filter = ConfigurationManager.AppSettings["WatcherFilter"];

                // Determine whether you also want to watch subfolders
                watcher.IncludeSubdirectories = true;

                // Determine for which changes to watch (multiple notify
                // filters should be separated by a pipe |)
                //watcher.NotifyFilter = NotifyFilters.CreationTime;

                // Determine what to do for which events
                watcher.Created += new FileSystemEventHandler(watcher_Created);

                // Start watching (after the event handler is attached)
                watcher.EnableRaisingEvents = true;

                // Log start of service
                logText("FileWatcher started watching " + watcher.Path);
            }

            /// <summary>
            /// Method that executes when the service stops
            /// </summary>
            protected override void OnStop()
            {
                // Stop watching and release the watcher
                if (watcher != null)
                {
                    watcher.Dispose();
                    watcher = null;
                }

                // Log stop of service
                logText("FileWatcher stopped watching");
            }

            // Define the event handlers.
            private static void watcher_Created(object sender, FileSystemEventArgs e)
            {
                // Log the file that triggered the event
                logText("FileWatcher caught " + e.FullPath);

                // Connection to the database server where the packages are located
                using (SqlConnection ssisConnection = new SqlConnection(ConfigurationManager.AppSettings["SqlConnectionString"]))
                {
                    try
                    {
                        // SSIS server object with sql connection
                        IntegrationServices ssisServer = new IntegrationServices(ssisConnection);

                        // Get values from app.config
                        string catalog = ConfigurationManager.AppSettings["Catalog"];
                        string folder = ConfigurationManager.AppSettings["Folder"];
                        string project = ConfigurationManager.AppSettings["Project"];
                        string package = ConfigurationManager.AppSettings["Package"];

                        // The reference to the package which you want to execute
                        PackageInfo ssisPackage = ssisServer.Catalogs[catalog].Folders[folder].Projects[project].Packages[package];

                        // Create collection of parameters
                        Collection<PackageInfo.ExecutionValueParameterSet> executionParameter = new Collection<PackageInfo.ExecutionValueParameterSet>();

                        // Add a package parameter (ObjectType 30 = package parameter)
                        executionParameter.Add(new PackageInfo.ExecutionValueParameterSet { ObjectType = 30, ParameterName = "MyFilePathParameter", ParameterValue = e.FullPath });

                        // Add a logging level parameter: 1=basic, 2=performance
                        // (ObjectType 50 = execution/system parameter)
                        executionParameter.Add(new PackageInfo.ExecutionValueParameterSet { ObjectType = 50, ParameterName = "LOGGING_LEVEL", ParameterValue = 1 });

                        // Execute package asynchronously and get
                        // the identifier of the execution
                        long executionIdentifier = ssisPackage.Execute(false, null, executionParameter);

                        // Prevent 30 second time-out by getting the execution
                        // by its ID and then wait until it's completed
                        ExecutionOperation executionOperation = ssisServer.Catalogs[catalog].Executions[executionIdentifier];

                        // Wait while package is busy
                        while (!(executionOperation.Completed))
                        {
                            // Refresh and wait 5 seconds before retesting
                            executionOperation.Refresh();
                            System.Threading.Thread.Sleep(5000);
                        }

                        // Log package completion
                        logText("Package completed " + package);
                    }
                    catch (Exception ex)
                    {
                        logText("FileWatcher error " + ex.Message);
                    }
                }
            }

            /// <summary>
            /// Write message to log
            /// </summary>
            /// <param name="message">the log message</param>
            private static void logText(string message)
            {
                // Simple log version. You can replace it by for example an eventlog writer
                lock (logLock)
                {
                    using (FileStream fs = new FileStream(ConfigurationManager.AppSettings["LogFile"], FileMode.Append, FileAccess.Write))
                    using (StreamWriter sw = new StreamWriter(fs))
                    {
                        sw.WriteLine(DateTime.Now.ToString() + " - " + message);
                    }
                }
            }
        }
    }
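For packages that are not deployed to the SSIS catalog (Package Deployment Model), the body of watcher_Created could instead load the .dtsx file from disk and run it in-process. The following is only a rough sketch under assumptions: it uses the Microsoft.SqlServer.Dts.Runtime API, which needs an extra reference to Microsoft.SqlServer.ManagedDTS.dll, and the class name FilePackageRunner, the package path and the variable name passed in are hypothetical.

```csharp
using System;
using Microsoft.SqlServer.Dts.Runtime; // Reference: Microsoft.SqlServer.ManagedDTS.dll

public static class FilePackageRunner
{
    // Loads a package from the file system, passes the new file's path
    // to a package variable and executes the package synchronously.
    // Returns true when the package reports success.
    public static bool Run(string packagePath, string variableName, string filePath)
    {
        Application app = new Application();

        // Second argument is an optional event listener; null means none
        Package package = app.LoadPackage(packagePath, null);

        // Hypothetical variable, for example "User::MyFilePath"
        package.Variables[variableName].Value = filePath;

        DTSExecResult result = package.Execute();

        // Report errors collected during execution
        foreach (DtsError error in package.Errors)
        {
            Console.WriteLine("Package error: " + error.Description);
        }

        return result == DTSExecResult.Success;
    }
}
```

A call such as FilePackageRunner.Run(@"d:\MyPackages\MyPackage.dtsx", "User::MyFilePath", e.FullPath) would then replace the catalog code in the try block. Both the path and the variable name here are placeholders.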

5) Installer.cs

To make the Windows Service installable we need to add an Installer Class by right-clicking the project in the Solution Explorer and choosing Add, New Item...

[Figure: Installer.cs]

6) Add Service(Process)Installer

Next, drag ServiceInstaller and ServiceProcessInstaller from the toolbox to the designer surface of Installer.cs. If they are not available in the toolbox, add them manually by right-clicking the toolbox and choosing Choose Items... (just like in SSIS 2008 when adding third-party tasks).

[Figure: Service(Process)Installer]

7) Edit Service(Process)Installer

In the properties of serviceInstaller1 you can set values for properties like ServiceName, DisplayName and the Description of the service.

[Figure: Service Properties]
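The same installer properties can also be set in code instead of through the designer. The class below is a minimal sketch of that alternative. The class name FileWatcherInstaller and the display name and description texts are assumptions for illustration, and the ServiceName has to match the ServiceName of the service class.

```csharp
using System.ComponentModel;
using System.Configuration.Install;
using System.ServiceProcess;

// RunInstaller(true) tells installutil to run this installer
[RunInstaller(true)]
public class FileWatcherInstaller : Installer
{
    public FileWatcherInstaller()
    {
        // Account that runs the service. With ServiceAccount.User and no
        // username/password set, the installation asks for credentials.
        var processInstaller = new ServiceProcessInstaller();
        processInstaller.Account = ServiceAccount.User;

        // Name, display name, description and start mode of the service
        var serviceInstaller = new ServiceInstaller();
        serviceInstaller.ServiceName = "FileWatcherForSSIS";            // assumed name
        serviceInstaller.DisplayName = "File Watcher for SSIS";         // assumed text
        serviceInstaller.Description = "Starts an SSIS package when a new file arrives."; // assumed text
        serviceInstaller.StartType = ServiceStartMode.Automatic;

        Installers.Add(processInstaller);
        Installers.Add(serviceInstaller);
    }
}
```

Use either the designer-based installer or a class like this one, not both, to avoid registering the service twice.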

8) Building & Deploying

Now build the project in release mode (via the Build menu or Ctrl + Shift + B) and go to the bin\Release folder of your project. Copy these files to a suitable location for the service.

[Figure: Bin Release Folder]

9) InstallUtil

Now open the Visual Studio 201X command prompt as Administrator (otherwise you don't have rights to add a new service). In the command prompt go to the folder where the service is located. Then enter the following command:

    installutil FileWatcherForSSIS.exe

During installation it will ask for the account that runs the service. The user must be able to read the folder and to execute the package (just like the user in a SQL Server Agent job step). For your production server without Visual Studio (and without installutil.exe) you need to create a setup project in Visual Studio to install your new Windows Service.

[Figure: Visual Studio Command Prompt (run as Administrator)]

10) Start Service and test

Now go to your local services and start the new Windows Service. Then watch the log while adding a new file to the folder that you are watching.

[Figure: The new Windows Service]

[Figure: Log file]
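When testing with larger files, keep in mind that the Created event is raised as soon as the file appears, which can be before the copy has finished. A possible workaround is to wait until the file can be opened exclusively before starting the package. The helper below is a minimal sketch of that idea. The class name FileReady, the method name and the retry interval are assumptions and not part of the example project.

```csharp
using System;
using System.IO;
using System.Threading;

public static class FileReady
{
    // Tries to open the file exclusively until it succeeds or the timeout
    // expires. Returns true when the file could be opened, false otherwise.
    public static bool WaitUntilReadable(string path, TimeSpan timeout)
    {
        DateTime deadline = DateTime.UtcNow + timeout;

        while (DateTime.UtcNow < deadline)
        {
            try
            {
                // FileShare.None fails while another process still has the file open
                using (new FileStream(path, FileMode.Open, FileAccess.Read, FileShare.None))
                {
                    return true;
                }
            }
            catch (IOException)
            {
                // Still locked (or not there yet): wait and retry
                Thread.Sleep(500);
            }
        }

        return false;
    }
}
```

In watcher_Created this could be called as FileReady.WaitUntilReadable(e.FullPath, TimeSpan.FromMinutes(1)) before executing the package, logging an error when it returns false.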

Download Visual Studio 2010 Example Project

Note: This Windows Service must be installed on the same machine as SSIS.